Norrsken

Saturday, 28 May 2016

Faking Fullscreen!

As previously mentioned in "Compute shader and migrating to Monstro", we have had problems running the standalone executable in fullscreen. We figured now was the time to fix this, as it really lowers the quality of a project we are otherwise very happy with.

We were already on the right track when we tried to fix this problem earlier. When the scripts start executing, they force the window to a size of 4096 x 2400, which is the resolution of Monstro's desktop. The program then runs in a borderless window, which makes it look fullscreen. We also had to set the spawning position of the window to (0, 0) on the leftmost desktop. To do this, we used a script that detects the window by name (our project's name) and then executes the commands to move it.
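For anyone wanting to try the same trick, here is a minimal sketch of the idea in Python. It is not the script we used. It assumes a Windows machine and the standard Win32 calls `FindWindowW`, `GetWindowLongW`, `SetWindowLongW` and `SetWindowPos`; the helper names `borderless_style` and `fake_fullscreen` are made up for this example, and using "Norrsken" as the window title is an assumption.

```python
import ctypes
import sys

# Win32 window-style bits that draw the title bar, border and resize frame.
WS_CAPTION = 0x00C00000
WS_THICKFRAME = 0x00040000
WS_MINIMIZEBOX = 0x00020000
WS_MAXIMIZEBOX = 0x00010000
WS_SYSMENU = 0x00080000
GWL_STYLE = -16
SWP_FRAMECHANGED = 0x0020
SWP_SHOWWINDOW = 0x0040


def borderless_style(style: int) -> int:
    """Return the window style with all decoration bits cleared."""
    decorations = (WS_CAPTION | WS_THICKFRAME | WS_MINIMIZEBOX
                   | WS_MAXIMIZEBOX | WS_SYSMENU)
    return style & ~decorations & 0xFFFFFFFF


def fake_fullscreen(title: str, width: int = 4096, height: int = 2400) -> bool:
    """Find a window by title, strip its border and place it at (0, 0)."""
    if sys.platform != "win32":
        return False  # the Win32 calls below only exist on Windows
    user32 = ctypes.WinDLL("user32")
    user32.FindWindowW.restype = ctypes.c_void_p
    user32.FindWindowW.argtypes = [ctypes.c_wchar_p, ctypes.c_wchar_p]
    user32.GetWindowLongW.argtypes = [ctypes.c_void_p, ctypes.c_int]
    user32.SetWindowLongW.argtypes = [ctypes.c_void_p, ctypes.c_int,
                                      ctypes.c_long]
    user32.SetWindowPos.argtypes = ([ctypes.c_void_p, ctypes.c_void_p]
                                    + [ctypes.c_int] * 4 + [ctypes.c_uint])
    hwnd = user32.FindWindowW(None, title)  # look the window up by its title
    if not hwnd:
        return False
    style = user32.GetWindowLongW(hwnd, GWL_STYLE)
    user32.SetWindowLongW(hwnd, GWL_STYLE, borderless_style(style))
    # Move to the top-left corner and resize to cover the whole desktop.
    return bool(user32.SetWindowPos(hwnd, None, 0, 0, width, height,
                                    SWP_FRAMECHANGED | SWP_SHOWWINDOW))
```

Calling `fake_fullscreen("Norrsken")` once the window exists would then resize and move it; on other platforms the function simply returns `False`.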

Introducing: Northern Lights

So. At this point in the project there has lately been a lot of tweaking. While playing around with different settings, we realised that the particle system sort of resembles northern lights if we just edit the colors a bit... so we did! We also decided to change the name of the project at this point: we went from CyclooPS to just "Norrsken" (Northern Lights in Swedish). We also added some nice Creative Commons spacey music.

The best thing to do here is probably just to post a bunch of videos:

1: Playing around with the hands' strength. Here the pull of the hand is pretty low which allows particles to escape. Creates this kind of effect:

2: Putting up some signs on the screen and just observing the patterns that appear:

3: A bit more of the same stuff...:

And that's that.

Fixing a crucial bug with particles flying away with extreme speed

The thing about Newtonian gravitational physics is that when we put it into a system like this, it will create some odd behaviour.

The most pressing problem for us was that, after running the system for a long time, we noticed that particles started disappearing. We needed to find out why.

After some digging in the code we realised that if a particle is extremely close to a hand in one frame, the force on it is (by the laws of physics) divided by r squared. This means that if the distance between the hand and the particle is, for example, 0.000001, then 1/r² is 10^12 and the particle gets a speed that easily surpasses light speed. In our world, that means the particle will never ever be seen again, as it has flown far, far away...

The solution required multiple edits to the "physical laws" of our system.

First, if the distance that is about to be used in the calculation is lower than 0.01, we set it to 0.01. This is essentially a speed limit.

Second, if the particle flies outside the screen, not only does it stop, but its coordinate is moved back to the edge (e.g. the particle moves to x = 900, but the max of x (the edge) is 105, so we set it to 105).

Third, if a particle reaches an extremely high velocity, we gradually reduce its speed by a factor of 0.9 each frame, down to a maximum velocity that we have set. This third fix is not really a requirement, but rather something that creates a really aesthetically pleasing effect behind the hands' interaction. Check out this video:
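The first and third fixes can be sketched roughly like this in Python. This is an illustration rather than our shader code: the function names are made up, `MAX_SPEED` is a placeholder since the actual limit is not stated here, and stopping the deceleration exactly at the limit is an assumption. With the floor in place, 1/r² can never exceed 1/0.01² = 10,000.

```python
import math

MIN_DISTANCE = 0.01  # floor on r, from the first fix
DAMPING = 0.9        # per-frame factor, from the third fix
MAX_SPEED = 5.0      # hypothetical value; the real limit is not given


def clamped_distance(dx, dy):
    """Distance between particle and hand, never below MIN_DISTANCE."""
    return max(math.hypot(dx, dy), MIN_DISTANCE)


def damp_velocity(vx, vy):
    """Scale the velocity by DAMPING while the speed exceeds MAX_SPEED.

    Assumption: the speed is never pushed below MAX_SPEED by the damping.
    """
    speed = math.hypot(vx, vy)
    if speed <= MAX_SPEED:
        return vx, vy
    new_speed = max(speed * DAMPING, MAX_SPEED)
    scale = new_speed / speed
    return vx * scale, vy * scale
```

Calling `damp_velocity` once per frame makes a runaway particle lose 10% of its speed each frame until it is back at the limit, which is what produces the trailing effect.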

Particle behavior, physics, interaction and restrictions

When we finally had a beta version of the particle system up and running, we continued working on changing the behavior of the particles' movement. This step in the process is highly dependent on the touch interaction working correctly, so that the feedback upon touching the screen is perceived as expected.

Touching the screen and physics

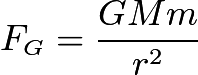

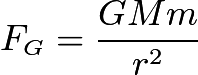

A hand touching the screen is supposed to simulate the placement of a gravity source at the location of the hand. This means that every particle is going to be affected by a certain force depending on its position relative to the hand touching the screen. All the calculations are based on Newton's law of universal gravitation, in order to get real physics behavior. The following formula can be used to describe the force which affects each particle. G is a gravitational constant, M and m are the masses of the two different objects, i.e. the gravity source (a touching hand) and a particle. r is the distance between these two. All these variables result in the force Fg.
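For reference, Newton's law of universal gravitation written out with the symbols described above:

```latex
F_g = G \frac{M m}{r^2}
```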

Touching the screen with one hand will thus add a force directed from each particle towards the position of the hand. This will alter the velocity and direction in which the particle was previously heading, for each rendered frame. Particles which are far away from the hand will not be affected nearly as much as those closer to the hand, due to the division by the distance squared.

Once there is more than one hand touching the screen, this calculation has to be performed for each hand, on all the particles. The forces are then summed to get the final force that changes each particle's direction and velocity. Hence, there are a ton of calculations being done in order to render each frame, which is one of the main reasons we wanted to use the GPU in the first place.
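As a rough illustration of the per-frame work for a single particle, here is a small CPU-side sketch in Python. It is not our GPU code: the constants `G` and `HAND_MASS`, the function names, and the semi-implicit Euler update are all assumptions made for the example.

```python
import math

G = 1.0          # hypothetical gravitational constant, tuned for the scene
HAND_MASS = 1.0  # hypothetical mass M, the same for every hand


def acceleration(particle, hands):
    """Sum the pull of every hand on one particle (a = F / m = G * M / r^2)."""
    px, py = particle
    ax = ay = 0.0
    for hx, hy in hands:
        dx, dy = hx - px, hy - py
        r = math.hypot(dx, dy)
        if r == 0.0:
            continue  # direction is undefined when the particle is on the hand
        pull = G * HAND_MASS / (r * r)
        ax += pull * dx / r  # unit vector towards the hand times the pull
        ay += pull * dy / r
    return ax, ay


def step(position, velocity, hands, dt):
    """Advance one particle by one frame (semi-implicit Euler, an assumption)."""
    ax, ay = acceleration(position, hands)
    vx, vy = velocity[0] + ax * dt, velocity[1] + ay * dt
    return (position[0] + vx * dt, position[1] + vy * dt), (vx, vy)
```

The inner loop runs once per hand and the whole thing once per particle, so the cost per frame grows with the number of particles times the number of hands.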

One thing to note here is that the particles do not add any force to all the other particles in the scene, even though the formula states that they clearly would. However we did not like that idea, and only wanted the user interaction to be what changes the state of the scene. To further motivate why the particles do not affect each other it could simply be for the reason that their masses are too insignificant to result in any force big enough to be worth taking into account.

Restrictions

A couple of questions came up while implementing everything mentioned above.

- What happens if a particle reaches one of the screen's edges?

- What behavior do we need to implement for those cases?

- Do we need a bounding box?

- Should all edges have the same behavior?

The first thing we realized is that we have to set a bounding box. There is no point in having particles outside the visible parts of the scene, and if a particle traveled too far away, it would be very difficult to pull it back into frame due to the large distance it could build up.

Particles without a bounding box

Thus, we set limits for all edges. Any particle exceeding a limit has its position capped and its velocity set to zero along the axis on which it tried to exceed it. For instance, if a particle is trying to escape on the left side of the screen, i.e. on the negative side of the x-axis, it will have its x-velocity set to zero, while the y-velocity remains the same. Thus the particle will slide along the edge of the screen until it reaches a corner, where the same thing happens to the y-velocity.

This means particles trying to exit the scene stick onto the "walls" and corners. When being attracted back towards the center of the screen, the particles form cool looking shapes which we thought was an amazing feature.
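The sticky-wall rule is simple enough to sketch in a few lines of Python. The function names and the bounds used in the usage note are made up for illustration; only the rule itself (cap the position, zero the velocity on that axis) comes from the description above.

```python
def clamp_axis(pos, vel, lo, hi):
    """Cap one coordinate to [lo, hi]; zero that axis' velocity if capped."""
    if pos < lo:
        return lo, 0.0
    if pos > hi:
        return hi, 0.0
    return pos, vel


def stick_to_walls(x, y, vx, vy, bounds):
    """Apply the sticky-wall rule independently on each axis."""
    x_min, x_max, y_min, y_max = bounds
    x, vx = clamp_axis(x, vx, x_min, x_max)
    y, vy = clamp_axis(y, vy, y_min, y_max)
    return x, y, vx, vy
```

With hypothetical bounds of `(-105, 105, -60, 60)`, a particle at x = -120 moving left is put back at x = -105 with zero x-velocity while it keeps its y-velocity, so it slides along the wall.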

Stopping particles which go too far

One exception is the upper edge of the screen. In this case we did not want the particles to stick to the edge, because the top of the screen is hard to reach due to its height, so particles stuck there would have a hard time getting back into the action. Therefore, we set the top edge to bounce the particles back down, simply by reversing the y-velocity and scaling it down by a factor of 10 in order to maintain smooth movement and prevent chaos.
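A minimal Python sketch of the bouncy top, assuming the y-axis points upwards so that the top edge is the largest y value. The function name and the `scale_down` parameter are made up for the example.

```python
def bounce_off_top(y, vy, y_max, scale_down=10.0):
    """Reflect a particle that passed the top edge and weaken its y-velocity."""
    if y > y_max:
        # Put the particle back on the edge and send it down at a tenth
        # of the speed it arrived with.
        return y_max, -vy / scale_down
    return y, vy
```

A particle that overshoots a top edge at y = 60 with a y-velocity of 20 ends up back at y = 60 with a y-velocity of -2.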

Bouncing particles back from the wall

Particles appear at the other side (experimental feature, not implemented!)

Sticky walls and bouncy top (final version)

Multitouch working with the Particle System

As the touch wasn't really working at all, this was the next step.

The problem was actually a combination of a couple of things. Firstly, for some reason, the GPU refused to run a for loop over our array of hand data (despite our best efforts with settings etc). In the end we therefore decided to make each hand a separate variable that is sent to the GPU. Apart from that, there were some data type issues which occurred when moving the hand data to the GPU. In the end, a C# Vec2 can be sent to a receiving variable of type float2 in the shader. This might be good to know for someone trying to do something similar to us.

There is probably a better solution than going over the hands one by one in a really ugly piece of code repetition instead of looping over them, but we decided to leave it as it is for now, as we actually got stuff working. Here's the video:

Touch interaction not working as intended

As promised in the last post, here is a video of the particle system with hand interaction that is sort of not working, but at least doing something!

We want the hands to act as "gravity sources" or planets that affect the particles with Newtonian physics. Therefore we now have to work a lot on actually getting the hand data to the shader in the correct way. The problems probably occur because of a restriction in the shader, or because of some small error that we haven't found yet.
